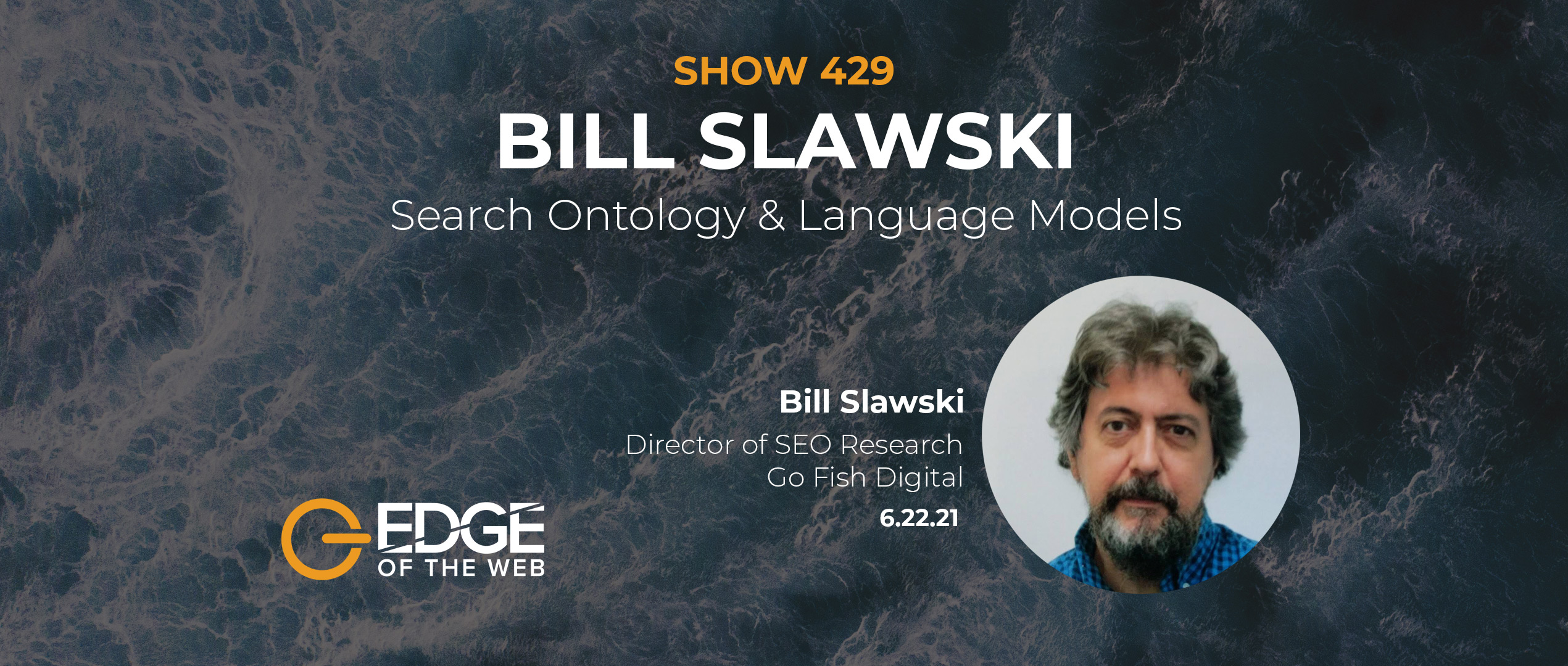

Bill Slawski is currently the Director of SEO Research at Go Fish Digital and previously worked as a solo consultant. He has been involved in internet marketing and web promotion since 1996 and has written over 1,000 posts on his site, SEO by the Sea. Having worked with a wide range of sites, Slawski has experience with everything from nonprofits to Fortune 500 companies. He is also known for his research into search engine patents. Slawski is an accomplished contributing writer and has spoken at several SEO industry conferences. Here, he offers a guide to the new frontier of machine learning and natural language processing as they apply to entities.

- [00:03:59] When Bill got started in patent sleuthing

- [00:07:27] Tracking the authors of the patents, like authors of good books

- [00:13:40] Tying it all together: “You shall know a word by the company it keeps”

- [00:19:20] Tying entities into search can be easier than SEO

- [00:22:57] The weight and impact of taxonomies and ontologies

- [00:26:36] The language models that Google is building now

- [00:28:36] Latent Semantic Indexing is a misnomer

Tracking Authors of Patents

Slawski began investigating Google patents after giving a presentation in 2004-2005 in which he mentioned a paper he co-authored on ways of determining whether something is spam. Spammers would buy domains and set up subdomains on them. Slawski would count the number of hyphens in a subdomain name to estimate the probability that it was spam. Through his research, he determined that Google uses pretrained natural language processing models, though Google is not forthcoming about when it launches them.
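The hyphen-counting idea can be sketched as a simple heuristic. This is a hypothetical illustration, not the method from the paper Slawski mentioned: the function names, the `threshold` of 3, and the assumption that the registered domain is the last two hostname labels are all illustrative choices.

```python
from urllib.parse import urlsplit


def subdomain_hyphen_count(url: str) -> int:
    """Count hyphens in the subdomain labels of a URL's hostname.

    Assumption: the registered domain is the last two labels (e.g. example.com).
    This simplification ignores multi-part suffixes such as .co.uk.
    """
    host = urlsplit(url).hostname or ""
    labels = host.split(".")
    subdomain_labels = labels[:-2]  # everything left of the assumed registered domain
    return sum(label.count("-") for label in subdomain_labels)


def looks_spammy(url: str, threshold: int = 3) -> bool:
    """Hypothetical rule: flag hostnames whose subdomains contain many hyphens."""
    return subdomain_hyphen_count(url) >= threshold


print(subdomain_hyphen_count("http://cheap-blue-widgets-free-shipping.example.com/"))
print(looks_spammy("https://www.example.com/"))
```

A real classifier would combine many such signals and learn weights from labeled data rather than rely on a single fixed cutoff.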

Google’s lack of transparency about these launches is what drives Slawski’s investigations. Google continually files patents, and rather than one sizable filing that covers all of its algorithm changes, the work is broken apart into separate patents, each focused on different concepts of entities and the interrelationships of data. One patent he recently came across describes using machine learning to rank pages. Slawski compares tracking specific patent authors to looking for your favorite author in a bookstore: he follows these inventors to understand what they mean by what they write.

Understanding The Importance of Context

Words change meaning with context, and the same word can be interpreted in different ways. Context terms are being indexed, telling Google what a page is about. The idea is to give context to subject matter rather than focusing strictly on keywords. The key is understanding context, and understanding how Google understands context, which is what Slawski has been homing in on.

The number of times a word appears in a document helps determine how relevant that word is to the page. Phrase-based indexing looks at whether related phrases appear on a page, which indicates how likely it is that the page covers a given topic. An inverted index of phrases can then predict what a page’s content is about based on the phrases that appear on it. Relating this to the importance of context, Google experiments with language models to see how words work together. This moves away from relying on words that share a common root and toward words that are related by context: when certain words are used, other words are likely to appear on the same page.
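A toy sketch of these ideas: raw term frequency, an inverted index of phrases, and "related phrases" found by counting which phrases appear on the same pages. It assumes pages have already been reduced to sets of phrases (deciding what counts as a phrase is out of scope). The corpus, the `min_count` threshold, and `topic_score` are hypothetical; this is not Google's phrase-based indexing implementation.

```python
import re
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical toy corpus: each page id maps to the set of phrases on that page.
PAGES = {
    "p1": {"apartment complex", "swimming pool", "parking", "lease terms"},
    "p2": {"apartment complex", "lease terms", "pet policy"},
    "p3": {"swimming pool", "pool heater", "chlorine"},
    "p4": {"apartment complex", "parking", "pet policy"},
}


def term_frequency(text):
    """Raw count of each lowercase word in a document."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def build_inverted_index(pages):
    """Map each phrase to the set of page ids that contain it."""
    index = defaultdict(set)
    for page_id, phrases in pages.items():
        for phrase in phrases:
            index[phrase].add(page_id)
    return index


def co_occurrence_counts(pages):
    """Count how many pages each unordered pair of phrases appears on together."""
    counts = Counter()
    for phrases in pages.values():
        for a, b in combinations(sorted(phrases), 2):
            counts[(a, b)] += 1
    return counts


def related_phrases(phrase, pages, min_count=2):
    """Phrases that co-occur with `phrase` on at least `min_count` pages."""
    related = {}
    for (a, b), n in co_occurrence_counts(pages).items():
        if n >= min_count and phrase in (a, b):
            related[b if a == phrase else a] = n
    return related


def topic_score(page_phrases, phrase, pages):
    """Share of `phrase`'s related phrases that also appear on a given page."""
    related = related_phrases(phrase, pages)
    if not related:
        return 0.0
    return len(related.keys() & page_phrases) / len(related)


print(related_phrases("apartment complex", PAGES))
print(topic_score({"parking", "lease terms", "gym"}, "apartment complex", PAGES))
```

The point of the co-occurrence step is that "parking" and "lease terms" end up associated with "apartment complex" without sharing any root word with it.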

Connecting Entities with Search: Easier Than SEO?

The SEO industry is trying to define what makes content relevant. To do that, a search engine marketer needs to understand how context is mapped. However, Google is not giving us a map of what it understands. The question is: Will research evolve to cover what surrounds a topic, not just the topic itself? Slawski recounts a client who was trying to rent out apartments in their complex. He found that the problem was that their website did not say much about the complex. Once Slawski added more context, the page was much more successful. The anecdote shows why it is necessary to tie together concepts and entities.

The relationship between concepts and entities helps both the consumer and content marketing because it draws on pattern recognition. If you establish a relationship between a concept and an entity consistently enough, Google is more likely to favor you as a resource, because your content makes those connections for the user. Showing how concepts and entities interrelate can be easier than focusing solely on SEO.

Explaining Taxonomies and Ontologies

When it comes to search, there are two key players: taxonomies and ontologies. Put simply, a taxonomy is a way of organizing content into categories, with broader and more specific content organized at different levels. Slawski compares this to the way a car can be broken down into different systems: you understand where all the parts are and how they connect at each level. A taxonomy is not just the structure of a website; it is also how that content is organized and navigated.

An ontology is more complex because it tells you how things are related; its focus is on relationships. To Google, knowledge and relationships are synonymous. An ontology does not just file content away to understand it; it maps the relationships, which is what Google’s knowledge panel is trying to understand. If you can map the relationships between your content and the outside world, you start building a relationship model that Google can rely on.
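To make the distinction concrete, here is a minimal sketch using the car example. The taxonomy records only parent/child filing, so the most it can tell you is where something sits; the ontology records typed relationships between things. The part names and relationship labels are hypothetical.

```python
# Taxonomy (hypothetical): each category points to its broader parent.
TAXONOMY = {
    "engine": "car",
    "brakes": "car",
    "pistons": "engine",
    "spark plugs": "engine",
    "brake pads": "brakes",
}

# Ontology (hypothetical): typed relationships, not just parent/child filing.
ONTOLOGY = [
    ("spark plugs", "ignite fuel in", "engine"),
    ("brake pads", "press against", "brake rotors"),
    ("engine", "is powered by", "gasoline"),
]


def taxonomy_path(node, taxonomy):
    """Walk from a node up to the root, returning the path broadest-first."""
    path = [node]
    while node in taxonomy:
        node = taxonomy[node]
        path.append(node)
    return list(reversed(path))


def relationships_of(thing, ontology):
    """All (relation, other) pairs in which `thing` is the subject."""
    return [(rel, obj) for subj, rel, obj in ontology if subj == thing]


print(taxonomy_path("pistons", TAXONOMY))
print(relationships_of("engine", ONTOLOGY))
```

The taxonomy answers "where does this go?", while the ontology answers "how does this relate to other things?", which is the kind of information a knowledge panel draws on.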

Language Models

Google’s language models read content from pages using the knowledge they were trained on. They can recognize and pinpoint entities, looking for proper nouns (people, places, and things) and for fact patterns related to them. The language models create what are called “triples”: a subject, a verb, and an object. Google’s knowledge graph shows why things are connected to other things, and content strategists can fill gaps in those connections to reinforce them.
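A minimal sketch of storing facts as triples, querying them, and spotting gaps a content strategist might fill. The entities ("Maple Court", "Oak Terrace", "Springfield"), the `is a` typing convention, and the gap rule (a predicate that peers of the same type have but an entity lacks) are all hypothetical, chosen for illustration; this is not how Google's knowledge graph works internally.

```python
from collections import defaultdict

# Hypothetical facts in (subject, predicate, object) form.
TRIPLES = [
    ("Maple Court", "is a", "apartment complex"),
    ("Maple Court", "located in", "Springfield"),
    ("Maple Court", "has amenity", "swimming pool"),
    ("Oak Terrace", "is a", "apartment complex"),
    ("Oak Terrace", "located in", "Springfield"),
]


def query(triples, subject=None, predicate=None, obj=None):
    """Return triples matching every field that is not None (None = wildcard)."""
    return [
        t for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    ]


def find_gaps(triples, entity_type):
    """For entities of one type, list predicates their peers have but they lack."""
    members = {s for s, p, o in triples if p == "is a" and o == entity_type}
    preds = defaultdict(set)
    for s, p, _ in triples:
        preds[s].add(p)
    all_preds = set().union(*(preds[m] for m in members))
    return {m: all_preds - preds[m] for m in members if all_preds - preds[m]}


print(query(TRIPLES, predicate="located in"))
print(find_gaps(TRIPLES, "apartment complex"))
```

Here the gap report says Oak Terrace has no `has amenity` facts, echoing the apartment anecdote: a page that states those facts supplies the missing connections.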

Where Does Latent Semantic Indexing Come into Play?

Latent semantic indexing was developed in pre-web days. The web has since changed the way people structure content, and it does not stay the same for very long. As a result, latent semantic indexing is inadequate for taxonomy and ontology: it would need constant re-engineering, and it cannot feed actual machine learning. The odds are that Google does not use the original latent semantic indexing.